Inside the Core Node: How OptimAI Builds an Efficient, Scalable Data Network for Agentic Intelligence

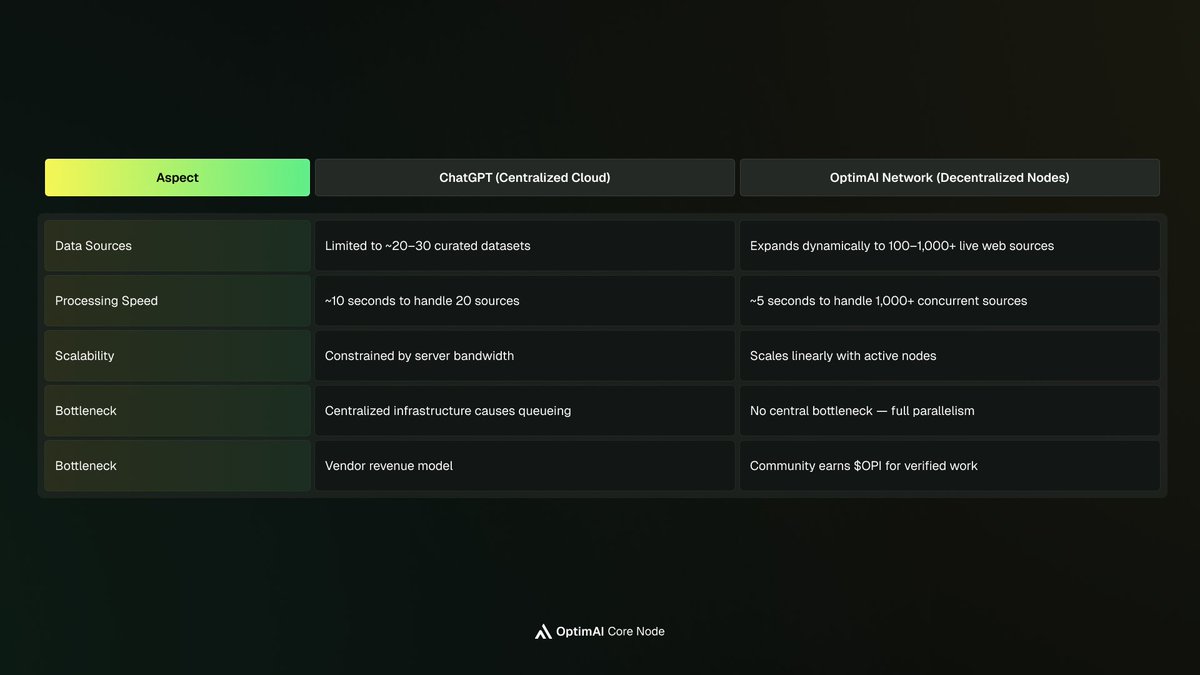

In traditional AI systems, all intelligence lives inside giant centralized data centers: expensive to run, slow to update, and limited to the data they’re allowed to see. OptimAI Network takes a different path. Instead of one monolithic AI brain in the cloud, it distributes intelligence across thousands of Core Nodes around the world. Each Core Node is a mini data center that can see, understand, and share information with the network.

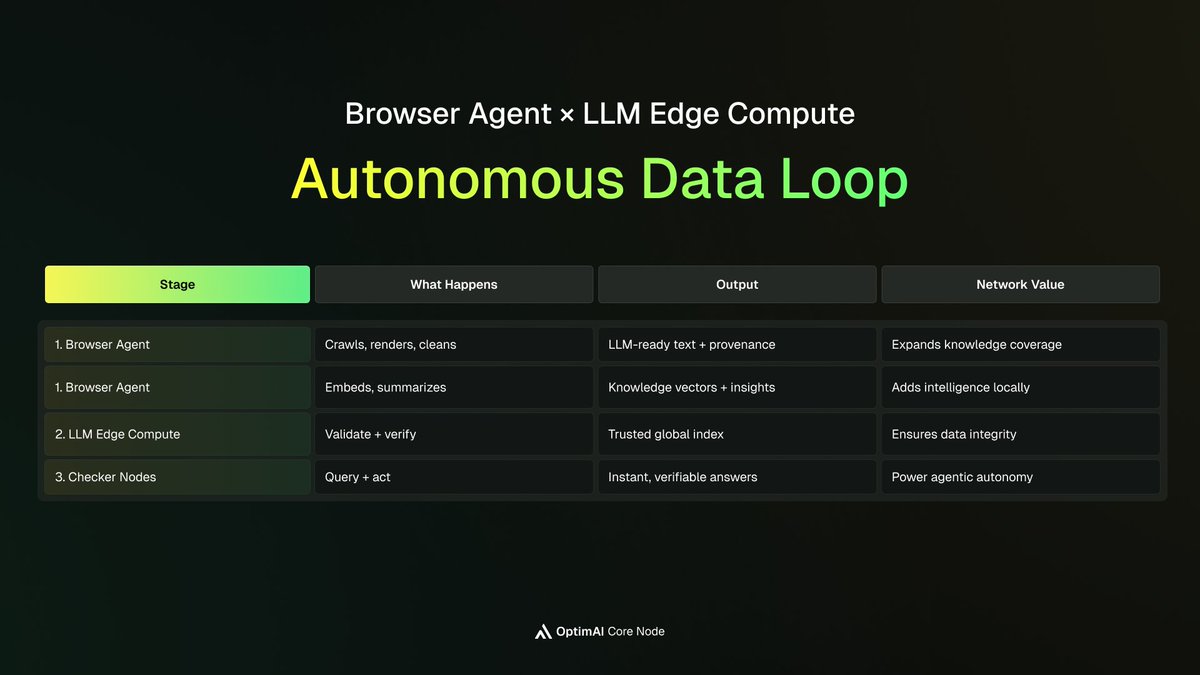

Each Core Node combines two components:

- The Browser Agent is how the node sees: crawling, rendering, and cleaning web data.

- The LLM Edge Compute is how it understands: embedding, summarizing, and packaging that data into structured knowledge.

Together, these components make the OptimAI Network more intelligent, more adaptive, and far more efficient than traditional cloud AI.

1. The Browser Agent: The Network’s Eyes

What It Does

- Accesses and renders public web pages, even dynamic JavaScript-heavy ones.

- Cleans, normalizes, and transforms messy HTML into structured, LLM-ready text chunks.

- Adds provenance — every chunk carries its source URL, timestamp, and hash signature.

- Uses differential crawling to detect changes and update only what’s new.
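To make the cleaning and provenance steps concrete, here is a minimal sketch using only the Python standard library. It assumes the page has already been rendered to HTML (the real Browser Agent also executes JavaScript, which this does not), and it interprets the "hash signature" as a SHA-256 hash of each chunk's text; actual signing is not shown. The names `Chunk`, `clean_html`, and `to_chunks` are hypothetical, not OptimAI's API.

```python
import hashlib
import re
from dataclasses import dataclass
from datetime import datetime, timezone
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script>, <style>, <noscript>."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


@dataclass(frozen=True)
class Chunk:
    text: str
    source_url: str
    fetched_at: str  # ISO-8601 UTC timestamp of when the chunk was produced
    sha256: str      # hex SHA-256 of the chunk text (assumed form of the "hash signature")


def clean_html(html: str) -> str:
    """Strip tags and non-visible blocks, then collapse whitespace."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()


def to_chunks(html: str, source_url: str, max_words: int = 50) -> list[Chunk]:
    """Split cleaned text into fixed-size word chunks, each carrying provenance."""
    words = clean_html(html).split()
    fetched_at = datetime.now(timezone.utc).isoformat()
    chunks = []
    for i in range(0, len(words), max_words):
        text = " ".join(words[i:i + max_words])
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        chunks.append(Chunk(text, source_url, fetched_at, digest))
    return chunks
```

Because the hash is computed over the chunk text itself, any downstream consumer can recompute it and confirm the chunk was not altered after it left the node.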

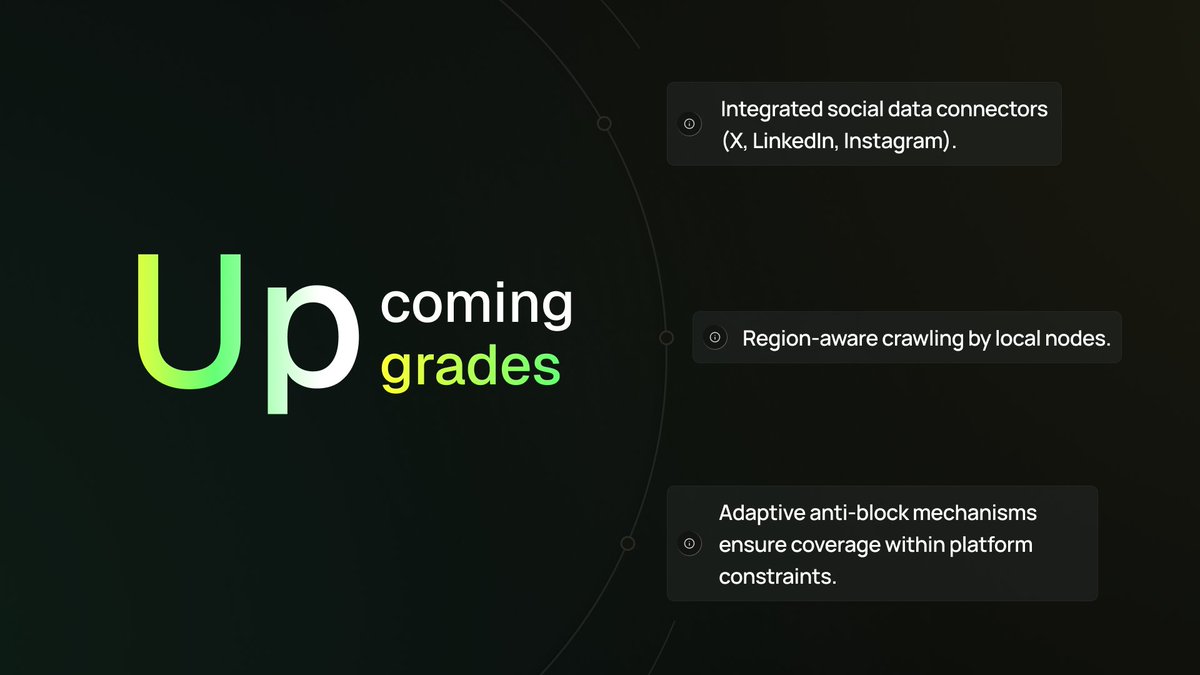

Upcoming Upgrades

- Built-in connectors for X, LinkedIn, Instagram, and other public social data (within policy).

- Geo-localized crawling — nodes focus on their own regions or language domains.

- Adaptive anti-block strategies that respect platform limits while maintaining global coverage.
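OptimAI has not described how its anti-block strategies will work. One standard way to "respect platform limits" is a per-host token bucket, sketched below as an assumption: each host gets `rate` requests per second with bursts up to `burst`, and a crawler skips or defers a URL when `try_acquire` returns `False`. `HostRateLimiter` is a hypothetical name.

```python
import time
from typing import Callable
from urllib.parse import urlsplit


class HostRateLimiter:
    """Per-host token bucket: `rate` tokens/second refill, capped at `burst` tokens."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.burst = burst
        self.clock = clock
        # host -> (tokens currently available, time of last refill)
        self._buckets: dict[str, tuple[float, float]] = {}

    def try_acquire(self, url: str) -> bool:
        """Consume one token for the URL's host if available; never blocks."""
        host = urlsplit(url).hostname or ""
        now = self.clock()
        tokens, last = self._buckets.get(host, (float(self.burst), now))
        # Refill in proportion to elapsed time, never exceeding the burst size.
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[host] = (tokens - 1.0, now)
            return True
        self._buckets[host] = (tokens, now)
        return False
```

Limiting per host rather than globally lets a node keep crawling many different sites at full speed while staying polite to each individual one.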

Why It Matters

Each Browser Agent expands the collective data frontier, capturing targeted, diverse, and more accurate data through high-concurrency crawling. When thousands of nodes each gather a small piece of the internet, the network sees far more of the world, and that view stays constantly refreshed and verifiable.
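"High-concurrency crawling" typically means keeping many fetches in flight at once while capping the total so a node does not overload itself. The sketch below shows that pattern with `asyncio.Semaphore`; it is an illustration under assumptions, not the Browser Agent's implementation. `crawl_all` and `FakeFetcher` are hypothetical names, and `FakeFetcher` only simulates network latency in place of a real rendering fetch.

```python
import asyncio
from typing import Awaitable, Callable


async def crawl_all(urls: list[str],
                    fetch: Callable[[str], Awaitable[str]],
                    max_concurrency: int = 8) -> dict[str, str]:
    """Fetch every URL concurrently, with at most `max_concurrency` fetches in flight."""
    sem = asyncio.Semaphore(max_concurrency)

    async def worker(url: str) -> tuple[str, str]:
        async with sem:  # wait here while the cap is reached
            return url, await fetch(url)

    results = await asyncio.gather(*(worker(u) for u in urls))
    return dict(results)


class FakeFetcher:
    """Stand-in for a real page fetch; records the peak number of concurrent calls."""

    def __init__(self, latency: float = 0.01) -> None:
        self.latency = latency
        self.in_flight = 0
        self.peak = 0

    async def __call__(self, url: str) -> str:
        self.in_flight += 1
        self.peak = max(self.peak, self.in_flight)
        await asyncio.sleep(self.latency)  # simulate network latency
        self.in_flight -= 1
        return f"<html><body>{url}</body></html>"
```

For example, `asyncio.run(crawl_all(urls, FakeFetcher(), max_concurrency=5))` fetches every URL in `urls` while never running more than five fetches at a time.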

“Every Core Node is a window into a different corner of the internet.”

2. The LLM Edge Compute: The Network’s Brain

What It Does

- Runs local preprocessing, embedding, and summarization on incoming data.

- Uses open-source or quantized small language models for on-device reasoning.

- Performs entity extraction and semantic chunking, turning unstructured text into searchable knowledge vectors.

- Compresses context before uploading to save bandwidth and boost inference speed.
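Semantic chunking usually means splitting text where the meaning shifts rather than at a fixed length. A minimal sketch, assuming sentence-level splitting: embed each sentence, and start a new chunk wherever the cosine similarity between adjacent sentences drops below a threshold. The bag-of-words `_embed` is a toy stand-in; a real node would call its small embedding model there. All names and the `threshold` value are hypothetical.

```python
import math
import re
from collections import Counter


def _embed(sentence: str) -> Counter:
    """Toy bag-of-words vector; a placeholder for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, threshold: float = 0.2) -> list[str]:
    """Group consecutive sentences; break wherever neighbours are dissimilar."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        return []
    chunks, current = [], [sentences[0]]
    for prev, sent in zip(sentences, sentences[1:]):
        if _cosine(_embed(prev), _embed(sent)) < threshold:
            chunks.append(" ".join(current))  # topic shift: close the current chunk
            current = []
        current.append(sent)
    chunks.append(" ".join(current))
    return chunks
```

Given "Core Nodes crawl the web. Core Nodes render pages. Bananas are yellow fruit. Bananas grow in bunches.", this returns two chunks, one per topic, because the only low-similarity boundary falls between the second and third sentences.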

Why It Matters

This is where OptimAI pushes intelligence to the edge. Instead of sending raw text to a cloud server, nodes process it locally. Only compact embeddings or summaries are shared: smaller payloads, lower latency, and adaptive intelligence that lives closer to the data source.
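To see why sharing embeddings and summaries shrinks payloads, consider this sketch of a hypothetical upload format: a small JSON header, a zlib-compressed summary, and the embedding quantized to one signed byte per dimension (symmetric int8 quantization, a common technique assumed here; OptimAI's wire format is not published). `quantize_int8`, `dequantize_int8`, and `build_payload` are illustrative names.

```python
import json
import struct
import zlib


def quantize_int8(vec: list[float]) -> tuple[float, bytes]:
    """Symmetric int8 quantization: one float scale plus one signed byte per dimension."""
    scale = max((abs(x) for x in vec), default=0.0) / 127 or 1.0  # avoid a zero scale
    q = [max(-127, min(127, round(x / scale))) for x in vec]
    return scale, struct.pack(f"{len(q)}b", *q)


def dequantize_int8(scale: float, data: bytes) -> list[float]:
    """Approximate reconstruction; each value is off by at most scale / 2."""
    return [v * scale for v in struct.unpack(f"{len(data)}b", data)]


def build_payload(source_url: str, summary: str, embedding: list[float]) -> bytes:
    """Hypothetical upload: length prefixes + JSON header + compressed summary + int8 vector."""
    scale, qvec = quantize_int8(embedding)
    header = json.dumps({"url": source_url, "scale": scale, "dims": len(qvec)}).encode("utf-8")
    body = zlib.compress(summary.encode("utf-8"))
    # Three big-endian uint32 lengths let the receiver split the parts apart again.
    return struct.pack("!III", len(header), len(body), len(qvec)) + header + body + qvec
```

A 384-dimensional embedding costs 384 bytes this way instead of 1,536 bytes as float32, and the whole payload for a page is a few hundred bytes rather than the page's full text.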

“In the cloud, you send data to think. In OptimAI, thinking happens where the data lives.”

3. Browser Agent × LLM Edge Compute = Autonomous Data Loop

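The Browser Agent collects and cleans fresh web data, the LLM Edge Compute turns it into compact embeddings and summaries for the network, and differential crawling brings back only what has changed on the next pass. This closed loop means OptimAI is always learning: not retrained monthly in the cloud, but updated continuously through the network.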
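One pass of such a loop can be sketched in a few lines, assuming differential crawling is implemented by comparing a content hash against the one recorded on the previous pass. `refresh_cycle`, `fetch`, and `process` are hypothetical; `process` stands in for the chunk, embed, summarize, and upload steps.

```python
import hashlib
from typing import Callable


def refresh_cycle(urls: list[str],
                  fetch: Callable[[str], str],
                  process: Callable[[str, str], None],
                  seen: dict[str, str]) -> list[str]:
    """Run one crawl -> detect change -> process pass.

    `seen` maps URL -> SHA-256 of the content last processed and is updated in place.
    Returns the URLs that were (re)processed on this pass.
    """
    changed = []
    for url in urls:
        content = fetch(url)
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if seen.get(url) == digest:
            continue  # unchanged since the last pass: skip re-embedding
        process(url, content)  # e.g. chunk, embed, summarize, upload
        seen[url] = digest
        changed.append(url)
    return changed
```

Calling `refresh_cycle` repeatedly with the same `seen` dictionary processes every page on the first pass and afterwards only the pages whose content actually changed, which is what keeps a continuously running loop cheap.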

4. A More Intelligent, Adaptive, and Efficient Network

OptimAI’s Core Node network redefines how intelligence operates, distributing processing, knowledge, and validation across thousands of community-powered nodes. Each node contributes specialized insights, processes data locally, and earns rewards for real work, creating a self-improving system that’s responsive, transparent, and sustainable. Instead of relying on massive cloud infrastructure, OptimAI turns global collaboration into computational intelligence, forming a network that grows smarter with every participant.

“Where the cloud slows down under scale, OptimAI accelerates through collaboration.”

5. The Foundation of Agentic AI

By distributing both data access and data understanding to the edge, OptimAI transforms how AI learns and operates. Agents no longer rely on outdated, centralized servers. They tap into a living, evolving network of Core Nodes that is always learning, always updating, and always accessible. And because the system rewards contributors directly, the network naturally scales itself, creating a global intelligence infrastructure that’s more connected, adaptive, and resilient than any centralized cloud.

“Core Nodes don’t just process data — they make intelligence decentralized.”